My experience working as an operational DBA is that a significant

portion of time is spent on time consuming repetitive requests from the end users or

necessary maintenance work like monitoring space usage and remedying issues

with limited space.

If the DBAs are

lucky and are sent on training from time to time, they may have the opportunity

to learn about interesting topics like performance tuning or troubleshooting

performance issues. My experience with

this is that you can go off and learn all sorts of tips and tricks about

performance optimization and get back to work with intentions of utilizing your

new or refreshed skills and proactively tuning your environment. However, you get back to work and the steady

stream of day to day requests such as security provisioning returns and your best

intentions once again have to take a back seat.

The topic of using Windows/AD groups for the purposes of SQL

Server security is not only a security best practice that is recommended by Microsoft

but also a very concrete action you can take to free up time to perform

additional proactive operations that improve the SQL Server environment that

you are responsible for.

In this blog, Ill summarize just a couple benefits of using

Windows/AD groups to make securing your SQL Server much more efficient and less

labor intensive.

Anyone who has attained any level of SQL Server

certification knows that learning the basics of SQL Server is required

learning. Two of the first things you

learn about is the need for creating users and logins in SQL Server and time is

spent learning the difference.

In order

to even connect to a SQL Server instance you need to have a login to

authenticate your credentials. That

login is mapped to a user object and from there privileges and permissions are

provisioned as necessary. Sounds simple

right? It is but it can get a lot more complicated due to the amount of

work it would take to administer all of these objects if we created logins and

user objects for each individual user and for each application context.

The basis of this blog is that Windows authentication should

be used to leverage the security of a trusted domain in a Windows network

environment. Benefits also include being able

to use the features such as password complexity and expiration requirements

that are administered by the domain administrators.

When defining a login it is good idea to use an Windows/AD group

such as the following:

USE [master]

GO

CREATE LOGIN [DOMAIN\ADGroup] FROM

WINDOWS

WITH

DEFAULT_DATABASE=[Adventureworks2012];

Then you can create a user object for this login:

USE [Adventureworks2012]

GO

CREATE USER [DOMAIN\ADGroup] FOR LOGIN [DOMAIN\ADGroup]

WITH DEFAULT_SCHEMA=[dbo];

EXEC sp_addrolemember N'db_datareader', N'DOMAIN\ADGroup';

GO

CREATE USER [DOMAIN\ADGroup] FOR LOGIN [DOMAIN\ADGroup]

WITH DEFAULT_SCHEMA=[dbo];

EXEC sp_addrolemember N'db_datareader', N'DOMAIN\ADGroup';

A very real benefit from a security view is that now

connection strings from applications to the SQL Server instance do not need to

include vulnerable passwords. If the

domain is a trusted domain then a connection string such as the following will

be much more secure.

"Driver={SQLServer};Server=SERVERNAME;Database=Adventureworks2012;Trusted_Connection=yes;"

The biggest time saving

benefit is now when a new employee is hired or when an employee leaves, the

domain administrator will just have to include or exclude them from the Windows/AD

group and because the login is created with the Windows/AD group the user will

automatically be included or excluded from the SQL Server security model. No more adding or deleting individual logins

and users and also the risk of creating orphaned users is reduced as well.

A lesser known benefit of using Windows/AD groups for logins is that

the ability to audit on a granular level is not lost. For example, a user is authenticated by

signing on to their trusted domain. The Windows/AD group is mapped to a login. When a

SQL Server user who is mapped to that login performs DDL or DML their actions

are logged as their Windows account. If

you were to execute SP_WHO2 you would see their own personal Windows

account ie: Domain\UserName.

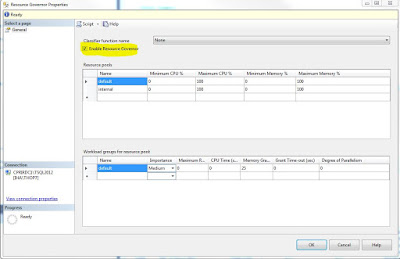

This illustration shows the various level of security from a

Windows level to a Database level.

Example: Permissions Hierarchy (MSDN)

By

creating a Windows/AD group and a server level role and a database level user

the security model becomes very robust.

Thanks for reading,

SQLCANUCK